An Inside View of your Competitors’ Spend on Media

I am sure you have wondered how much your competitors spend on social platforms to boost their posts or promote their tweets. You have probably also wondered what media spend strategy you should adopt in response.

What if we said you could see the media spend on your competitors’ pages and handles in real time? Sounds promising? You bet!

The true measure of any media planning exercise is the influence you are able to have over your target audience. If you have set out to achieve a specific Share of Voice (SOV) or Share of Engagement (SOE) for your brand, consider boosting your Facebook posts or promoting your tweets to reach those goals.

Directing your media spend towards boosting your posts on social media is an effective way to reach a larger audience, increase social engagement, get more followers and drive direct-response conversions. There’s a method to this. Read on.

Take Stock

At the outset, decide what your targets for SOV and SOE should be. For example, you may want a third of the possible reach and engagement amongst your audience to be attributable to your brand. Measure your standing in the existing landscape and consider your current SOV and SOE to check whether you are reasonably close to the targeted goal.

- If the gap to your stated goal is insignificant, you are already on track to achieve your SOV and SOE goals without incremental media dollars. This leaves you free to spend your media budget on other activities.

- More likely, this will not be the case, as most brands today find that organic reach for their pages is being reduced to single-digit percentages of the community size. If you find that your SOV and SOE are lagging, you need to understand how to drive these metrics up to the desired level.
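The stock-taking step above can be sketched in a few lines of Python. This is only an illustration: it assumes SOV is measured as your brand’s share of all mentions in your category (the same arithmetic works for SOE using engagement counts), and the function names and figures are hypothetical, not taken from Auris or any other tool.

```python
def share(brand_value: float, market_total: float) -> float:
    """Return the brand's share of a market total as a fraction (0 to 1)."""
    if market_total <= 0:
        raise ValueError("market_total must be positive")
    return brand_value / market_total


def gap_to_target(current_share: float, target_share: float) -> float:
    """Return how far the current share falls short of the target (0 if met)."""
    return max(0.0, target_share - current_share)


# Hypothetical figures, for illustration only.
brand_mentions = 1_200
all_mentions = 6_000   # your brand plus all tracked competitors
target_sov = 1 / 3     # "a third", as in the example above

current_sov = share(brand_mentions, all_mentions)
sov_gap = gap_to_target(current_sov, target_sov)
print(f"Current SOV: {current_sov:.1%}, gap to target: {sov_gap:.1%}")
```

A gap close to zero corresponds to the first case above; a sizeable gap corresponds to the second.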

Decide on what spend is right

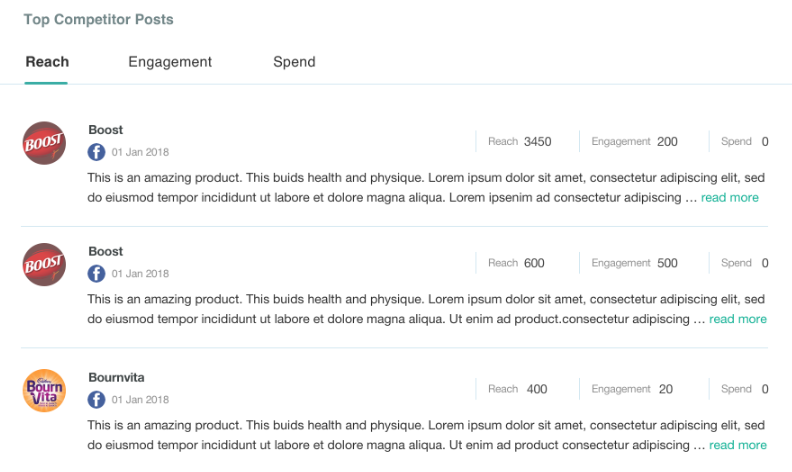

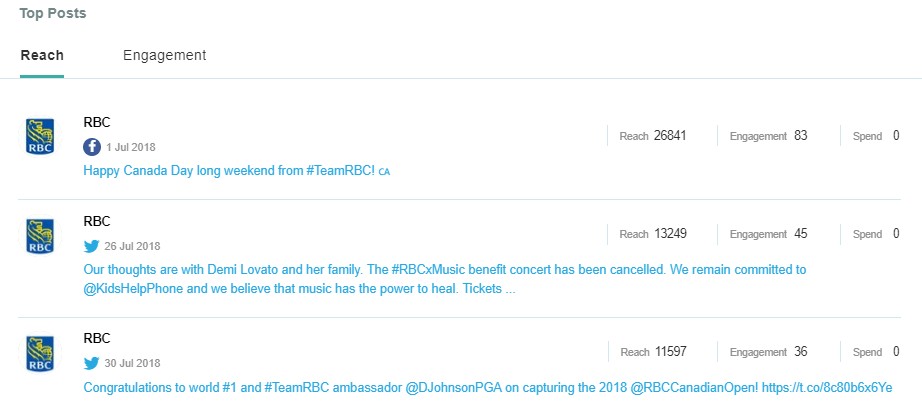

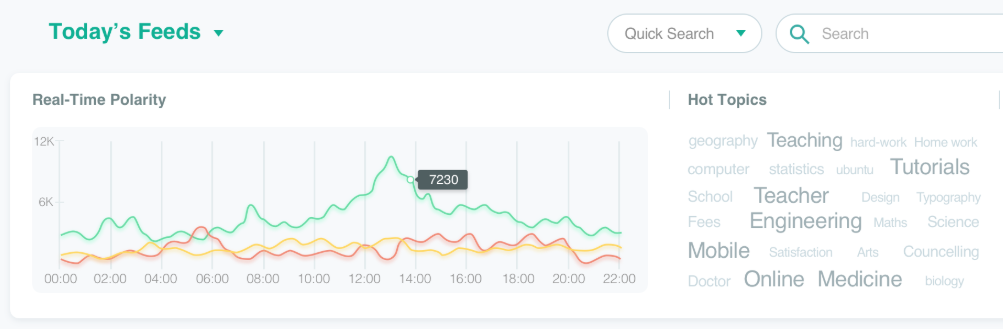

- Estimate how much your competitors spend on boosted posts and tweets, and what SOV and SOE they have achieved. Auris can help you here.

- Back-calculate what you may need to spend on boosted posts to achieve the right SOV and SOE against your competition.

- Track this every month and quarter to check whether your assumptions about competitive spend have changed.
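One way to picture the back-calculation is the rough Python sketch below. It rests on two simplifying assumptions that are ours, not a published method: that a competitor’s spend divided by the engagement it bought gives a usable cost per engagement, and that this cost stays roughly constant as you spend more. All names and numbers are hypothetical.

```python
def extra_engagement_needed(brand: float, total: float, target_share: float) -> float:
    """Extra engagement the brand must add to reach target_share.

    Solves (brand + x) / (total + x) = target_share for x, because any
    engagement you add also grows the category total.
    """
    if not 0 < target_share < 1:
        raise ValueError("target_share must be between 0 and 1")
    needed = (target_share * total - brand) / (1 - target_share)
    return max(0.0, needed)


def estimated_spend(extra: float, competitor_spend: float,
                    competitor_paid_engagement: float) -> float:
    """Estimate spend using the competitor's observed cost per engagement."""
    if competitor_paid_engagement <= 0:
        raise ValueError("competitor_paid_engagement must be positive")
    cost_per_engagement = competitor_spend / competitor_paid_engagement
    return extra * cost_per_engagement


# Hypothetical figures, for illustration only.
extra = extra_engagement_needed(brand=2_000, total=10_000, target_share=1 / 3)
budget = estimated_spend(extra, competitor_spend=5_000,
                         competitor_paid_engagement=10_000)
print(f"Extra engagement needed: {extra:.0f}, estimated spend: {budget:.0f}")
```

Re-running the calculation with fresh competitor estimates each month or quarter shows whether the budget still matches the target.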

By pegging your strategy to the results obtained by your competitors, you can make sure that your dollars are spent wisely, with a better return on investment than deciding on spend in an ad hoc manner. Sign up for a free 14-day trial of Auris and see this in practice!

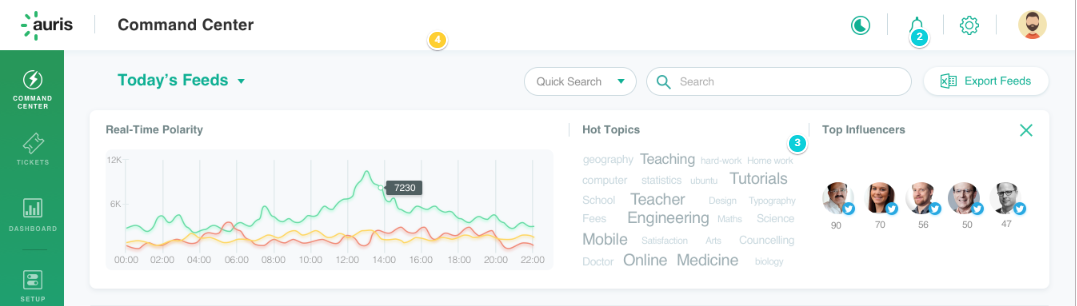

3. Identify common concerns and address them through educative blogs or posts

4. Ride the wave of trending topics

5. Closely watch how your competitors are performing