An Incredible Alternative to Questionnaire-based Research

Questionnaire-based research methodologies have their own well-known limitations. It is tragic but true, that we realize this fact only in retrospect, after we spent tens of thousands of dollars! Most often, they are derailed by the use of either the wrong sample or the wrong questions, or both. What if there was no such limitation? What if we could structure quantitative studies in the same open-ended manner that we deploy in qualitative research and still derive the quantitative insights we wanted? We explore the limitations of questionnaire-based methods and propose a possible solution.

The practices of market research might seem simple to the lay person. All it involves is this: We determine/choose a sample of respondents, present them with a pre-designed questionnaire to which we elicit their answers, collate them and extrapolate the insights offered to a larger audience and publish our findings. This may all seem very simple, but the reality is that market research is an involved science. Designing the questionnaire, selecting the sample of respondents and administering the questionnaire are all as scientifically determined as the methods applied in running a credible statistical analysis on the results. Each one of these steps offer multiple nuances, on account of the multiple options and methods involved in the process.

The result of such complexity and nuances in market research methods is that not all studies are successful. How many times have we questioned the results after an expensive study? There are several things which could have gone wrong.

Let’s look at some potential sources of such errors in a questionnaire-based survey. You’d understand that these limitations and biases impact consumer research more frequently than one would imagine:

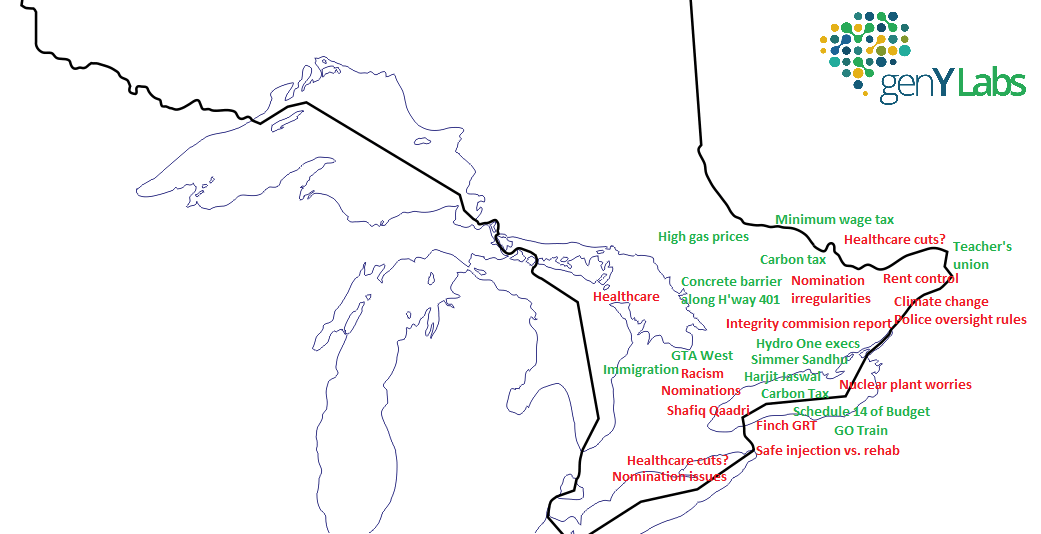

- Selecting the population: The sampling frame selected and the weightages attributed to extrapolate it to a larger group may not truly represent the population. We see this error particularly affecting the prediction of election outcomes. Collecting the opinions of the wrong crowd is bound to result in wrong findings.

- Biased Questionnaire: The researcher’s own point-of-view could creep into the way the questions are designed and the answers are interpreted or analyzed. This error is not measurable and can be very subtle or quite purposeful in the way it could cause the results to go wrong. Even senior researchers fall prey to this error, mainly because opinions about some topics, like religion, secularism, marriage, discipline or education (to name a few), are strongly entrenched for most people.

- Not from a Random Sample: The very act, of distributing a questionnaire and collecting responses to it, makes the possibility of obtaining a random sample difficult, which affects the reliability of the statistically derived predictions. To illustrate, the very fact that all are willingly agreeing to fill out the questionnaire makes the survey non-random, but it can’t ever be fixed because the ones who refused to fill it out will not change their minds anyway. People who are offered incentives to take a survey could be biased.

- Data not Weighed: It is important to weigh the data collected by the sub-group which willingly agreed to cooperate and respond to the questionnaire. Sometimes, surveyors fail to collect or incorporate this information, like gender or ethnicity, to weight the data and compensate for its effect on the predictions made based on the survey responses. Another way to control this error would be to quota the sample by deciding how many people belonging to each sub-group will participate in the survey.

- Over-correction: When faced with concerns about possible errors and biases creeping into one’s data, researchers tend to over-correct it for every imaginable error. This could potentially skew the characteristics of the data and make it lose its capability to represent the larger population.

- Miscellaneous:

- Closed-ended questions have a pattern in responses, as respondents go by primacy and recency which weigh in for the first and last options.

- The order in which questions are posed could influence the responses.

- Leading/pointed questions, however subtle, impact the results obtained.

- Saying ‘Yes/Agree’ comes easier than saying ‘No/Disagree’.

Research is still exploring ways and means to correct these errors and biases which could creep into one’s survey. Informed opinion asserts that an excellent alternative to a questionnaire-based survey would be collecting the voluntary responses of consumers, given without any prompting or incentive, making social listening important for market research.

What if there was no need to set out with a set questionnaire? Just like in qualitative research, but then with a sample large enough to enable us to derive quantitative insights? What if we could eliminate, to some extent, such biases from impacting our research?

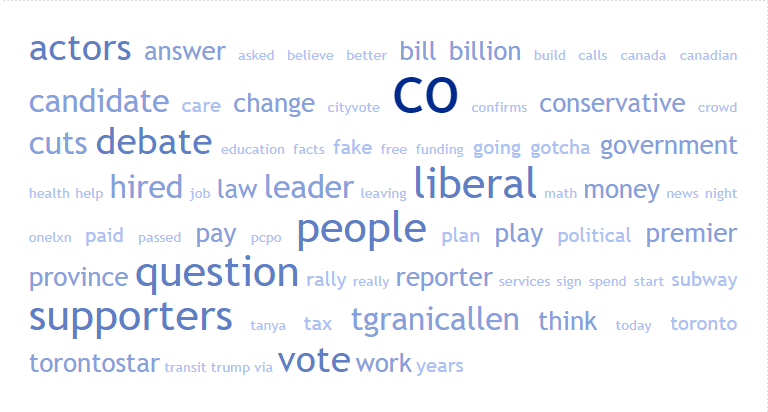

The answer to these “what ifs” is a method where one could have access to an infinite set of consumer views which could be mined to understand their possible responses to multiple questions. Large data sets of, voluntarily offered, consumer opinion help remove the constraints on the number of ways we could slice and dice the sample of our choice, answering a hundred different questions.

Auris, our AI-powered consumer insights tool is an attempt to make this wish come true. With Auris you have an incredibly large sample of all consumer feedback which they have expressed of their own volition. A researcher can now look at the cohorts of their choice and seek answers to as many questions as come to mind. Your research therefore yields trustworthy results because you gain the ability to listen to an unlimited amount of feedback in real time, from a random selection of people who voluntarily provide it to you.